Why GPT Alone Won’t Cut It for Real Document Extraction

A practical look into the limits of LLMs in Document Intelligence

At anyformat, our mission is simple: turn any file into the data you need.

Large Language Models like GPT have transformed how we interact with text. They summarize, translate, and generate content with impressive fluency.

But when it comes to turning real-world documents — invoices, delivery notes, project plans — into reliable, structured data, the idea of “just using GPT” collapses quickly.

🧩 Real Documents Are Complex — And That Matters

Real documents are not just plain text. They combine:

- paragraphs

- visual hierarchies

- stamps and signatures

- tables

- diagrams and figures

- mixed layouts

Trying to extract everything in a single model pass (one-shot extraction) often breaks in subtle and unpredictable ways.

Why?

Because the model must simultaneously perform layout understanding, semantic interpretation, and structure preservation — and even minimal noise can derail the output.

🧪 Can GPT Do OCR? Yes. Can You Rely On It? Sometimes.

Every robust document pipeline starts with one essential step:

OCR — turning a PDF or scan into machine-readable text (Markdown/HTML).

Multimodal LLMs can perform OCR, but in practice, we consistently observe:

- Minor layout noise → major misinterpretations

- Low-contrast scans → merged or partially missing text blocks

- Tables and figures → flattened or misread as prose

- Visual context → ignored

And the key issue:

🔄 When OCR is flawed, every downstream extraction step is doomed.

A table misread as a paragraph? Impossible to parse.

A lost header? The semantic structure collapses.

An incorrect number? Business logic breaks.

These aren’t edge cases — they’re dealbreakers for companies trying to automate workflows with LLMs alone.

📉 Tables: The Silent Breaker of Document Pipelines

Tables compress multidimensional meaning into layout-dependent structures.

Their interpretation depends not just on text but on:

- row and column positions

- headers

- merged cells

- implicit structural cues

Even strong LLMs frequently fail to:

- 🔄 Maintain structure — rows disappear, headers merge

- 🧱 Preserve layout — ambiguous formatting becomes prose

- ⚠️ Ensure consistency — near-duplicate tables produce different outputs

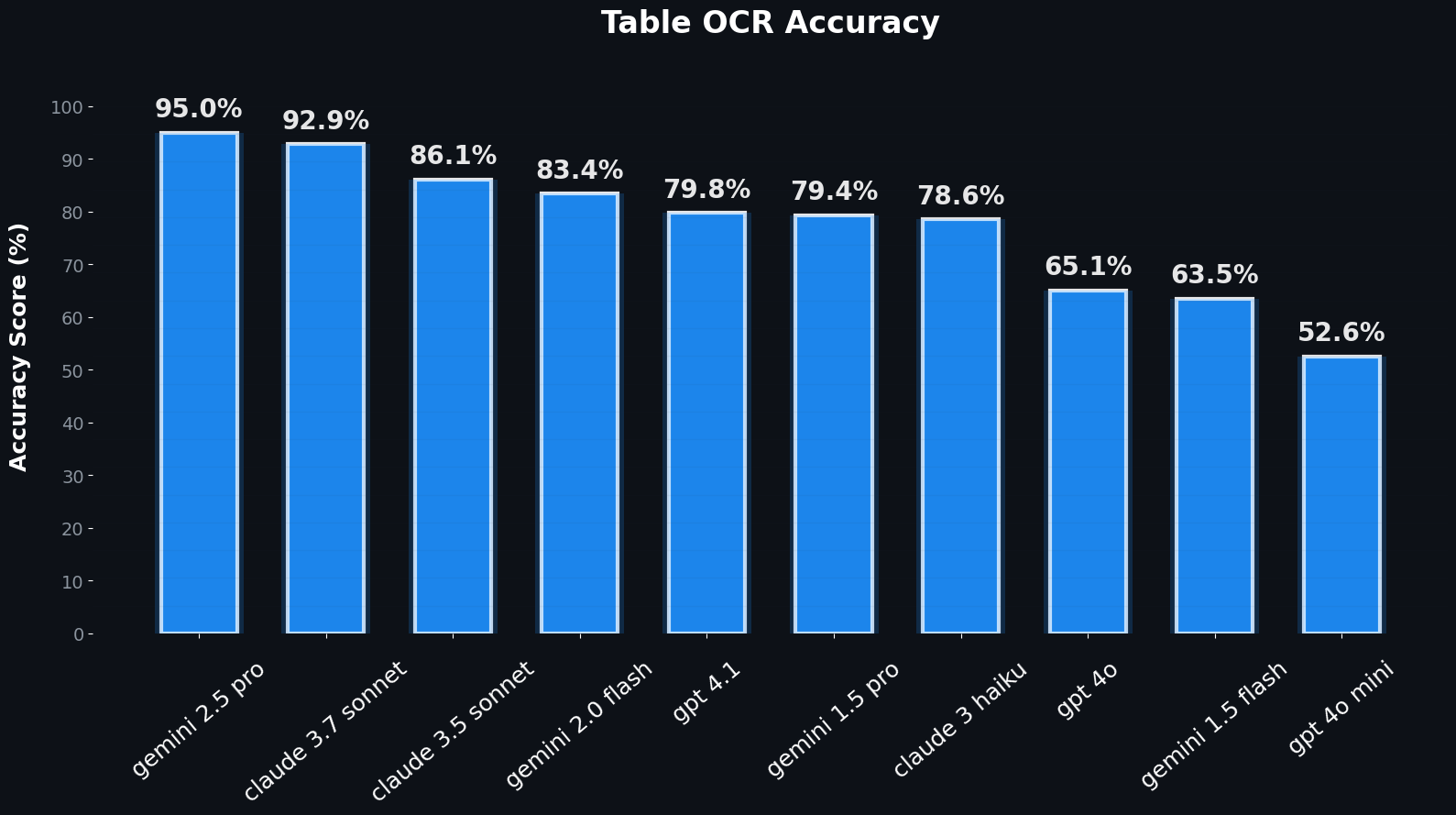

📊 Benchmark: Table Accuracy Across LLMs

We evaluated ~1,130 tables from 1,000+ real documents, derived from the GetOmni OCR Benchmark [1].

The dataset is immensely valuable (thank you GetOmni!), but not perfect:

- Repetitive layouts reduce diversity and may inflate performance

- Mixed table formats (Markdown + HTML) complicate evaluation

In our research, many failures originate before extraction — in the Markdown parsing step. Markdown simply cannot represent complex table structures.

At anyformat, we manually validated and corrected ground-truth HTML to ensure consistent evaluation.

We compared predicted HTML tables using normalized edit distance [2].

Below is an example of our one-shot table extraction accuracy across LLMs:

Figure 1: One-shot extraction table OCR accuracy across various LLMs, using normalized edit distance between predicted and GT HTML.

Key takeaways:

- Accuracy varies sharply across models

- Smaller models frequently underperform

- Some large models (like Gemini Pro) excel — but may be overkill

So how do you maximize precision without blowing your cost envelope?

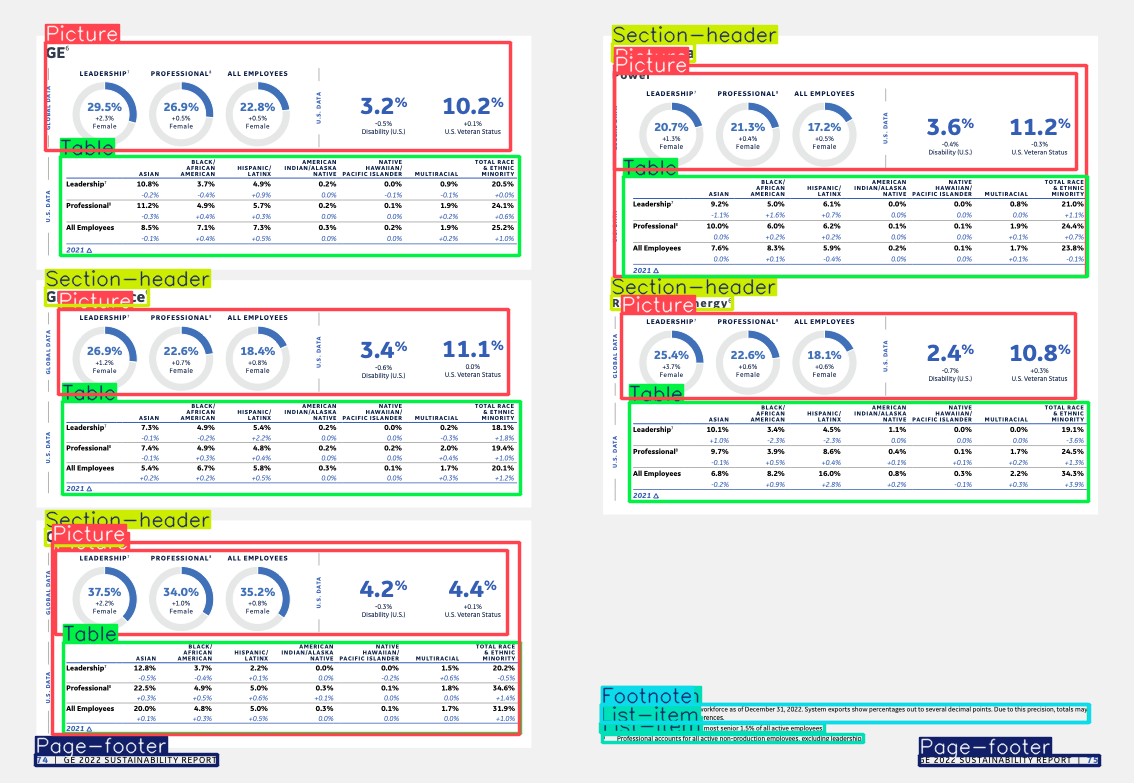

🛠️ Our Approach: Decompose Before You Extract

At anyformat, we avoid brittle, monolithic model calls.

Instead, our AI agent orchestrates the extraction, decomposing each document into its constituent parts.

Our structure-aware, multi-stage pipeline includes:

- Pre-OCR enhancement

Contrast, denoising, DPI improvements - Semantic segmentation

Identifying paragraphs, tables, figures, footnotes - Critical element detection

Stamps, signatures, visual markers - Routing to specialized extraction routines

Using tailored LLM prompts per element type - Validation and reconciliation

Postprocessing, consistency checks, error correction

This decomposition ensures:

- Noise and layout artifacts are filtered early

- Hallucinations decrease

- The model’s task is constrained and reliable

Even general-purpose LLMs perform far better once the extraction task is properly framed.

Figure 2: Example of a complex document layout with multiple interacting elements — demonstrating why segmentation is crucial.

🛠️ From DIY to Done-for-You

Some teams try to build their own LLM-powered extraction pipelines.

A few succeed — after months of experimentation, engineering, and painful QA.

Most teams don’t have that time.

anyformat isn't a service. It's a product.

A production-grade extraction engine that works from day one.

You don’t need to build your own document intelligence stack.

You need one that works.

🚀 Ready to See It in Action?

Let us test anyformat on your documents.

See what accurate extraction looks like.

📧 info@anyformat.ai

🌐 https://www.anyformat.ai

🧾 Methodology Summary

- Documents analyzed: 1,000+ real-world files [1]

- Tables evaluated: ~1,130, with manually validated HTML

- Metric: Normalized edit distance [2]

- Models: Claude 3.7 Sonnet, Claude 3.5 Sonnet, Claude 3 Haiku, Gemini 1.5 Pro, Gemini 1.5 Flash, Gemini 2.0 Flash, Gemini 2.5 Pro, GPT-4o, GPT-4o mini, GPT-4.1

📚 Bibliography

[1] getOmni.ai. (2024). OCR Benchmark Dataset. Hugging Face.

[2] Zhong, Xu, et al. "Image-based table recognition: data, model, and evaluation." ECCV. Springer, 2020.